FDA Guidance on Artificial Intelligence (AI) in Medical Devices

In April of 2023, FDA released a draft guidance entitled "Marketing Submission Recommendations for a Predetermined Change Control Plan for Artificial Intelligence/Machine Learning (AI/ML)-Enabled Device Software Functions."

In today's episode, we speak with Mike Drues, PhD, President of Vascular Sciences, about artificial intelligence in medical devices, the history of this type of technology in the medical device field, and what FDA's guidance means and doesn't mean. We hope you enjoy this episode of the Global Medical Device Podcast!

Some of the highlights of this episode include:

- What artificial intelligence means in software as a medical device (SaMD)

- Why this new FDA draft guidance is needed

- What recommendations this draft guidance provides for medical device companies

- Challenges with validating the modifications for an ML-DSF

- What specific items to include in a PCCP

- Whether a PCCP affects the future need for a Letter to File or a new 510(k), and what would necessitate an additional marketing submission

Memorable quotes from Mike Drues:

"I really try to stress what I call 'regulatory logic,' because if you understand the regulatory logic, really, all of this should be common sense." - Mike Drues

Transcript

Etienne Nichols

00:00:09.360 - 00:03:01.790

Hey everyone. Today's episode is about the FDA draft guidance that came out in April 2023. It's about AI/ML in your software as a medical device.

But it has kind of a wordy title: Marketing Submission Recommendations for a Predetermined Change Control Plan for Artificial Intelligence/Machine Learning (AI/ML)-Enabled Device Software Functions.

So, if you hear us use the acronym ML-DSF (I don't think we used it very often), it stands for Machine Learning-Enabled Device Software Functions. There's another acronym, also pieced together from the title of this draft guidance: PCCP, Predetermined Change Control Plan.

It's just a predetermined expectation of what you may be changing to avoid having to do an extra 510(k). That's of course a loose definition. You'll learn more in the episode. We ask a lot of different questions. Why is this new draft guidance needed?

What recommendations does the draft guidance provide for medical device companies? What are some of the challenges with validating modifications for an ML-DSF, or AI/ML-enabled device?

How should medical device manufacturers be thinking about these specific items of a PCCP, and what's the best approach to changes like this in the industry? We talked about a lot of different things. Our guest today is Mike Drues. He's one of the podcast's original guests.

He's a medical device professional in every sense of the word. He holds a PhD in biomedical engineering.

He has served as an expert witness and is one of the foremost regulatory minds of our day. He currently serves as the President of Vascular Sciences.

This episode is about the FDA guidance on artificial intelligence and the marketing submission recommendations for those predetermined change control plans. We hope you enjoy this episode. Hey everybody. Welcome back to the Global Medical Device Podcast.

Today with me is Mike Drues, a familiar name on the podcast. In fact, Mike, we were just talking about the last time you recorded an episode on this topic, which I should mention: artificial intelligence and software as a medical device. But before we get into that, how are you doing today? Good to have you on the show.

Mike Drues

00:03:02.270 - 00:03:06.590

I'm well, thank you, Etienne, and it's always a pleasure to speak with you and your audience.

Etienne Nichols

00:03:07.550 - 00:03:18.110

What I was mentioning about that episode in 2019, which we were discussing before we hit record: that was one of the most popular, if not the most popular, episodes of 2019. Is that right?

Mike Drues

00:03:18.750 - 00:03:57.010

Yeah, according to the Greenlight Guru statistics.

The podcast that you're referring to (and we can provide a link to it as part of this podcast), when your predecessor, Jon Speer, and I talked about artificial intelligence and machine learning, was the number one podcast listened to in the medical device world that year.

And since then, obviously, you and I have talked about AI off and on in other circles, but I'm looking forward to revisiting this topic and providing an update on what's changed, if anything, in the last four years.

Etienne Nichols

00:03:57.650 - 00:04:21.250

Yeah. And I suppose what kind of kicked this conversation off to start again is the draft guidance that was released in April of this year.

But before we get into the actual draft guidance, maybe it's worth talking about artificial intelligence, that phrase AI that's being tossed about everywhere. What does that even mean in software as a medical device? I'm curious what your thoughts are, for better or for worse.

Mike Drues

00:04:22.780 - 00:04:49.120

Well, I'm happy to share my thoughts as always, Etienne, but if you don't mind, let me turn the tables on you for just a moment, because you're exactly right: not just in the medical press but in the popular press, we see AI this and AI that every single day.

So, when you think of artificial intelligence and/or machine learning, and there is a subtle but important difference between those two, what does that mean to you, Etienne?

Etienne Nichols

00:04:49.670 - 00:05:44.150

Yeah, it's a good question. So, the way I've looked at it, and I'm, I'm interested to get your corrections or, or approvals here is I've looked at it as almost as a spectrum.

So, machine learning, if we start with machine learning, something that's relatively easy to grasp: I think of an app on my phone that asks me, did you eat lunch today or not?

And it determines my blood glucose levels or something like that, on a continuous glucose monitoring system or something. And so, it learns based on my direct input and this very closed data set that I'm providing it, and it gives me information.

So, I look at that as machine learning, whereas AI is still almost machine learning, but drawing from such a large data set that it's hard for me to quantify where the data is coming from. What are your thoughts? I'm curious. Any corrections you have there?

Mike Drues

00:05:44.150 - 00:06:17.130

Well, I think that's a great start, Etienne. And let me ask sort of a leading question, a clarifying question here.

Do you think that one of the characteristics of software that claims to have artificial intelligence is that it should be static, that is, it should not change over time? The code is locked, so to speak, and it cannot change?

Or do you think that it should be able to learn, almost in a Darwinian evolutionary sense? What are your thoughts on that?

Etienne Nichols

00:06:17.290 - 00:06:25.210

Yeah, well, if it's true artificial intelligence, I would expect the latter: the ability to change and to improve itself.

Mike Drues

00:06:25.770 - 00:08:34.910

And obviously I'm a little biased here, Etienne, because I asked you my leading question, but that's exactly how I feel.

I strongly believe, never mind as a regulatory consultant, but as a professional biomedical engineer, that this phrase artificial intelligence is grossly overused, to say the least. And not just in the medical device universe, but in the entire universe.

I see lots of products, including medical devices, that claim to have artificial intelligence in them. And when I look at them, I don't see any intelligence in them whatsoever, artificial or otherwise.

So, one of the characteristics of, as you said, true artificial intelligence that I think is important is its ability to learn and change and evolve, very much in a Darwinian evolutionary sense of the word.

And by the way, as some in our audience will already know, there's a reason why I'm starting with this: it goes to the premise of the guidance that we're talking about today.

If you don't allow the software to learn and change and evolve, then how is it different from any other piece of static software that's been out there for decades? And why do we even call it artificial intelligence?

So, I think that's one of the most important, if not the most important characteristics of true artificial intelligence, and that is its ability to change and to evolve.

And we'll talk more about how to do that and how we put some limits on that, some barriers on that, so that we don't have pieces of software that get out there and kind of get out of control.

I'm getting a little old, Etienne, and I don't even know if kids read Isaac Asimov anymore, and his famous Three Laws of Robotics. But Asimov predicted all of this stuff over half a century ago. It's amazing. But anyway, go ahead, your turn.

Etienne Nichols

00:08:34.910 - 00:09:28.340

No, no. Well, it's been a while.

I did read that, but I couldn't quote the three laws at the moment. Maybe I should look those up, and we can provide those here.

But no, it's a good point and I think it's a relevant point to talk about these things, especially because AI is becoming such a buzzword in the industry. And you mentioned the ability to change and adapt.

And that's kind of what this draft guidance is really trying to get at, it would seem.

I'll put a link to the draft guidance in the show notes, but its title is Marketing Submission Recommendations for a Predetermined Change Control Plan for Artificial Intelligence/Machine Learning (AI/ML)-Enabled Device Software Functions. And that gives us a new acronym, ML-DSF (Machine Learning-Enabled Device Software Functions), that maybe we need to start familiarizing ourselves with.

But yeah, what are your thoughts on this draft guidance as it relates to that ability to change?

Mike Drues

00:09:29.300 - 00:12:21.870

So, when Jon and I did the podcast that we referred to a moment ago back in 2019, one of the things that we talked about a lot was a concept FDA had introduced at the time called a locked algorithm. And at the time, not just in that podcast but in other venues as well, I publicly chastised FDA for introducing this concept.

I understand why they did it, but I chastised them. I understand that chastise is a very strong word, and I'm using it purposely here. And I'll explain why.

For those of you who are not familiar with the concept of a locked algorithm, the way I like to describe it is that we're sucking the artificial intelligence right out of the software.

In other words, we might use, for example, machine learning to help develop the software, but then we remove its ability to change and to grow and to evolve prior to putting it onto the market, aka locking the algorithm, writing it in stone, so to speak, and preventing it from changing.

And in FDA's defense, and I understand their thinking on this, the reason why they did that is because many of us are concerned about putting products out there that have the ability to change and evolve without any limits whatsoever.

So, almost five years ago, I basically said that this should be at best a temporary solution, because I saw it as a tremendous limitation on industry to literally suck the artificial intelligence out of the software. So, let's leave this as a temporary thing.

The other thing that frustrates me about a lot of folks in our industry, as well as at the FDA, Etienne, is that a lot of people will point out problems, but they don't offer potential solutions.

And once again, going back to that podcast almost five years ago now, not only did I point out in some detail the problems, as I saw them, with the locked algorithm, but I also introduced a number of potential solutions or fixes.

You mentioned one acronym from the new guidance; the other acronym that I thought was particularly interesting is something called a Predetermined Change Control Plan, or PCCP. Now, I didn't use that verbiage back in 2019, but that was one of my suggestions.

Do you want to drill into that now, Etienne, and what that means?

Etienne Nichols

00:12:22.670 - 00:12:23.920

Let's talk about that. Yeah.

Mike Drues

00:12:24.230 - 00:15:00.080

Okay, so one of the solutions I suggested to get around this archaic concept of a locked algorithm (and again, I'm using the word archaic purposely) is to give the software the ability to grow and evolve, but put some limits, some boundaries, on it. For those of you in our audience who have a quality background, this should not be a new idea. This is a predetermined validation.

So basically, in a nutshell, the idea is we're going to say to the software, okay, Mr. or Mrs. Software. And by the way, there's another reason why I'm addressing the software this way.

Because if the software is truly artificially intelligent, we should think of it kind of like a person, kind of like a human being. One of the metaphors I sometimes use is a corporation.

In the eyes of the law and in the eyes of the IRS, a corporation is essentially treated as an individual, as a person. So, AI should be thought of or treated as a person. So, we're going to say, okay, software, you can change.

You can vary a particular parameter, temperature or power level or whatever it is, as long as it's between X and Y.

In other words, as long as you want to make this change between X and Y, you can make any change that you want without having to tell the manufacturer, without having to tell the FDA. You just do it as the software, unilaterally.

And the reason why I made that recommendation, and the reason why FDA has now introduced it into guidance as a Predetermined Change Control Plan, is because we have pre-validated that range. In other words, we test the limits.

We do the classic validation where we show that for a value of X, for a value of Y, and for a few values in between, the device will be safe and effective, will perform as intended, and so on. So that was one of the many solutions that I offered up almost five years ago.

And to be fair, some of the products with artificial intelligence that I've helped bring to market have utilized this concept of what FDA now calls the PCCP, the Predetermined Change Control Plan, long before this guidance came out.

I'm trying not to get too far into the weeds in this, Etienne, but at least at a certain level, does that make sense?
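The pre-validated range described here can be modeled as a small data structure. The following Python sketch is a minimal illustration under stated assumptions: the class `PredeterminedRange`, the parameter name `tip_temperature_C`, and the 60 to 90 °C bounds are hypothetical and do not come from the FDA draft guidance or from any real device. It only checks whether a proposed value falls inside the validated envelope from X to Y.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PredeterminedRange:
    """Hypothetical record of one parameter's pre-validated envelope under a PCCP."""

    parameter: str
    lower: float               # validated value "X"
    upper: float               # validated value "Y"
    tested_points: tuple = ()  # "a few values in between" that were also validated

    def __post_init__(self) -> None:
        # A plan only makes sense if the bounds are ordered and every
        # intermediate test point actually lies between them.
        if self.lower > self.upper:
            raise ValueError("lower bound must not exceed upper bound")
        for point in self.tested_points:
            if not self.lower <= point <= self.upper:
                raise ValueError(f"tested point {point} is outside [{self.lower}, {self.upper}]")

    def is_preauthorized(self, proposed: float) -> bool:
        """True if the software may apply this value on its own under the plan."""
        return self.lower <= proposed <= self.upper


# Hypothetical plan for a software-controlled setting.
plan = PredeterminedRange("tip_temperature_C", lower=60.0, upper=90.0,
                          tested_points=(70.0, 80.0))

for proposed in (65.0, 90.0, 95.0):
    if plan.is_preauthorized(proposed):
        print(f"{proposed}: inside the pre-validated range, software may apply it")
    else:
        print(f"{proposed}: outside the plan, route through normal change control")
```

A value exactly at X or Y is accepted because the bounds themselves were validated; anything outside them goes back through the manufacturer's normal change control.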

Etienne Nichols

00:15:00.240 - 00:15:19.780

It does. I guess, in practice, do you have a good practical example of how this could work?

I mean, you gave the example of the temperature, but how do you arrive at some of these boundaries, to make sure that the boundary you're providing is large enough? I wonder if you have any thoughts on a practical example.

Mike Drues

00:15:19.780 - 00:20:04.190

Sure. So, let's try to use a real but somewhat hypothetical example. Using temperature as the example, imagine a cauterizer that you use in either traditional open surgery or perhaps laparoscopic surgery.

A cauterizer is a device that will introduce some form of energy.

It could be heat, or it could be electrical current, in order to cut through tissue and at the same time seal the tissue to prevent bleeding.

Now, typically the way these devices work is there will be a setting, usually a dial, like a potentiometer, on the device, where the surgeon will be able to change the temperature, the current, the radio frequency, whatever it is that you're using. Imagine that that dial is removed and now it's under software control.

And now the surgeon is cutting through tissue, and it starts out at some nominal temperature. But let's say the device at the same time is sensing how the cutting is going.

And maybe it's taking too long, or maybe you're still getting some bleeding or something like that. So rather than the physician or the surgeon having to reach to the device and crank up the power, so to speak, the device does it itself.

The device realizes that it's not getting the response that it wants. And so, it gooses up the temperature by 10% or 20% or something. And we will allow the software to do it itself as long as we have pre-validated it.

As long as the software is making increases or decreases in temperature or power within that predetermined range, then we know it's okay. We know it's safe and effective. That's during the actual procedure. But where does the real artificial intelligence come in?

This is what a lot of people don't realize, Etienne, because they're looking at these devices being used individually, in sort of a one-off fashion.

So, imagine that the surgery is completed, but the machine, the software, learns that information, that in this particular patient it needed a little more power.

Now maybe when the next patient comes into the room, the device will crank up the power, if necessary, a little bit sooner because of its past experience with the previous patient. And imagine next week, after you've done 10 patients or 20 patients.

If you think that's cool, Etienne, let me take it a step further: why does this cauterizer need to work in isolation?

Why can't this cauterizer then tell its other cauterizer friends, in that hospital or in other hospitals around the country or around the world, "Based on my experience, this is what I've learned"? So, this is where the AI gets really interesting. And this is clearly the future of this kind of technology.

But with the caveat that, at least temporarily, we're putting some boundaries on it. And let me just make one other reminder, Etienne, and then I'm happy to let you chime in, because I'm sure you've got lots of questions and comments.

A lot of people think that a lot of the challenges when it comes to AI are new. In fact, if you understand the regulatory logic, I see very, very few challenges here that are new.

What we're talking about here are basic principles of change management: a special 510(k) versus a letter to file, for example.

How do you decide when to notify FDA via a special 510(k), and when not to, documenting the change with a letter to file? That's a topic, as you know, that gets a lot of companies into trouble. Well, the same logic applies to artificial intelligence and the predetermined validation that I mentioned a moment ago.

The other piece of regulatory logic that comes to mind is: what does FDA regulate, and what does FDA not regulate? FDA does not regulate the practice of medicine.

So, when a surgeon cranks up the dial on the machine, FDA has nothing to do with that because that's the practice of medicine. FDA doesn't control that, but when the practice of medicine is done by the device, in this case the software, now FDA is all over it.

So, the challenges that we face in AI are really not unique. Many of these challenges we've been facing, in maybe different ways, for years, sometimes even decades. Does that make sense?
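The cauterizer example combines two behaviors: raising power during a procedure when the sensed response is inadequate, and carrying that experience forward to the next patient, both inside the pre-validated range. Here is a minimal Python sketch, assuming a hypothetical `AdaptiveCauterizer` with a 20 to 40 W validated range, a 10% step, and a simple weighted update of the starting power; none of these names or values describe a real device or control algorithm.

```python
from dataclasses import dataclass
from typing import Sequence


def clamp(value: float, lower: float, upper: float) -> float:
    """Keep a setting inside the pre-validated range [lower, upper]."""
    return max(lower, min(upper, value))


@dataclass
class AdaptiveCauterizer:
    """Hypothetical software-controlled cauterizer; all numbers are illustrative."""

    lower_w: float = 20.0       # pre-validated minimum power ("X")
    upper_w: float = 40.0       # pre-validated maximum power ("Y")
    start_w: float = 25.0       # nominal starting power for the next patient
    step: float = 0.10          # raise power by 10% when the response is inadequate
    learning_rate: float = 0.5  # how far one case moves the next starting point

    def run_procedure(self, response_ok: Sequence[bool]) -> float:
        """Simulate one procedure and return the final power used.

        response_ok[i] is the sensed result at check i (False means the cut is
        too slow or bleeding persists). Power never leaves [lower_w, upper_w].
        """
        power = clamp(self.start_w, self.lower_w, self.upper_w)
        for ok in response_ok:
            if not ok:
                # In-procedure adjustment: "goose up" the power, within the range.
                power = clamp(power * (1 + self.step), self.lower_w, self.upper_w)
        # Cross-patient learning: shift the next starting point toward what this
        # case needed, again clamped to the validated range.
        self.start_w = clamp(self.start_w + self.learning_rate * (power - self.start_w),
                             self.lower_w, self.upper_w)
        return power


device = AdaptiveCauterizer()
final_power = device.run_procedure([False, False, True])  # needed two increases
print(f"final power {final_power:.2f} W, next patient starts at {device.start_w:.2f} W")
```

The design choice worth noticing is that every path that changes power, including the cross-patient update, passes through `clamp`, so learning can move the starting point but never outside the pre-validated range.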

Etienne Nichols

00:20:04.350 - 00:20:38.300

It does. That's an interesting call-out: where the burden lies, whether it's with the physician or with the device.

I'll have to think about that for a little bit. That's really interesting. But I'm curious.

Let's just use your example of the cauterizer. That potentiometer likely should have been validated at the low point, the high point, and maybe an initial starting point.

So there's precedent, or a pre-existing path forward, it seems.

Mike Drues

00:20:38.300 - 00:21:08.920

Correct. And funny you mention that, because you're exactly right.

If that range of values on that temperature setting were pre-validated, then it's even simpler, because all we're doing is taking it out as a knob and implementing it in software.

But at the end of the day, Etienne, from a patient's perspective, from somebody lying on the table undergoing surgery, do you think that they're going to know or even care whether it's a knob or a piece of software as long as the boundaries are the same? Something to think about.

Etienne Nichols

00:21:09.080 - 00:21:09.480

Yeah.

Mike Drues

00:21:09.480 - 00:24:21.410

And one other thing to mention, Etienne, as we dig into this further: this is very much a continuation of that previous podcast, not just a rehash of it. We're using that podcast as a starting point, but we're building on it and going much further.

How do I want to say this?

One of the frustrations that I have is when I look at the labeling of a lot of medical devices today that have artificial intelligence in them, diagnostic devices, for example, and I've been involved in lots of diagnostic devices that now have AI in them.

In the labeling, they'll always put the little disclaimer, the little caveat, that the software thinks that the patient has this particular disease or should take this particular drug or something like that. However, and I forget the exact verbiage that we typically use, it's something along the lines of: the physician can always overrule it.

The physician can always, you know, say no, I disagree, or make a change. And I completely understand from a regulatory perspective why companies are doing that today.

But to me, as a biomedical engineer, that drives me nuts. The reason why companies are doing it today is that it's a classic form of risk mitigation.

By putting that disclaimer on there, Etienne, it's essentially making it sort of an adjunct diagnostic or an adjunct therapy. And adjunct is a word that we often use in regulatory labeling, quite frankly, Etienne.

And the reason why we do it mostly is to reduce the regulatory burden.

In other words, when we say this is an adjunct diagnostic, basically what we mean by that is this is to be used in combination with other things, including the physician's experience and judgment and so on. And the reason why that's advantageous to a company, Etienne, is because it tremendously lowers the regulatory burden.

In other words, we don't need to have nearly as much data to support a claim for an adjunct product as we would for a primary diagnostic or a primary treatment.

And once again, just like the concept of the locked algorithm I talked about earlier, I don't have a problem, for now, with treating these products as that kind of an adjunct. We don't usually use the word adjunct in AI, but the regulatory logic is, I won't even say substantially equivalent. It's exactly the same.

It's a temporary solution. It's a step in that direction. But eventually, Etienne, the future of this technology, in my opinion, is to get rid of that completely.

And the decision that the software makes, whether it's a diagnostic or even a treatment, should be just as valid as a decision that a physician or a surgeon makes. Equal footing.

Most companies don't want to go quite there yet, although I do have a couple of products where we're doing that. Most companies don't want to go there yet because they would have to collect a heck of a lot more data to support that kind of a claim. And obviously that's going to mean more time and more money, but that's, I hope, where we're going. Does that make sense?

Etienne Nichols

00:24:21.490 - 00:25:09.270

Oh, totally, and I agree. I've spoken with physicians on this before, and they typically push back and say that the physician is the one who ultimately makes the diagnosis.

However, that equal footing that you're talking about is really interesting. Let's use your example one more time.

Let's say the doctor is cauterizing that wound. They may have a visual, or they may have biofeedback that it's cutting slowly, and so forth.

Whereas you could have a sensor that detects what's actually happening at a much more molecular level, and that has a better understanding than the physician, especially after a certain amount of intelligent data gathering. I would think so. I totally agree, and I hope that's where we're moving.

Mike Drues

00:25:09.860 - 00:27:14.290

And here's another quick example of what I said a moment ago: a lot of these so-called challenges that people think we have with artificial intelligence are assumed to be new, and they're really not new.

You're probably familiar with robotic surgery and some of the robotic surgical devices that are out there. In fact, in the interest of full disclosure, I'm working as an expert witness in one of the largest product liability lawsuits in that area.

And I'm sure many in the audience can probably guess which company. It doesn't matter. But I think it's interesting that people use the phrase robotic surgery. In fact, it is absolutely not robotic surgery.

It is robotically assisted surgery. And what I mean by that is that in most cases, not quite all, but virtually all, the robot is not performing the surgery directly, that is, not unilaterally.

What's happening is, and you've probably seen this on TV if not firsthand, the surgeon is manipulating the instruments outside of the patient, and the robot is doing nothing more than taking the motions of the surgeon's hands, maybe scaling them up or down a little bit, maybe filtering out some tremors, but essentially just translating them into the instruments inside the patient. So, it is not robotic surgery in the Isaac Asimov sense of the word. It's robotically assisted surgery.

And the same logic applies to artificial intelligence when we use that disclaimer in the labeling that I just mentioned: this is what the software thinks, but you, doctor, can trump what I think if you want to. That's kind of like robotically assisted surgery, right?

But again, I want the technology to eventually become truly autonomous, so that it is working just like a surgeon. We're making progress, but it's taking a long time. I'm not saying that we're not going to need doctors or surgeons anymore. That's not my meaning here. But I think you know what I mean.

Etienne Nichols

00:27:14.690 - 00:27:46.230

And ultimately, it's really just a matter of identifying the additional inputs that could impact the decision. Is that not accurate? I mean, the doctor may have a certain piece of data that could alter the decision. So that's really what they're referring to.

I know it's a regulatory game, to decrease or increase the regulatory burden.

But identifying that additional input might be helpful in developing the AI and determining what other pieces of AI could help assist this software as well in the future.

Mike Drues

00:27:46.230 - 00:28:50.020

So, yeah, at least theoretically, when the software learns, either premarket during the development process or postmarket (assuming that we get past this archaic concept of the locked algorithm and the software can still learn postmarket), it should have access to all of the information that a physician or a surgeon would normally have. That means not just all of the different variables that might be important for that particular patient, maybe blood tests, maybe imaging data; it should also be able to draw on its previous experience, just like a good doctor will draw on his or her previous experience. So when I said earlier in our discussion that we should think of this software in almost humanistic terms, as an individual, I literally mean that in every sense of the word. Maybe that's a little scary to some people, but.

Etienne Nichols

00:28:51.140 - 00:29:14.720

Well, and so the boundaries that we're talking about: the goalposts are moving a little bit in my mind, in different ways. You talked about one way: drawing from its own experience, and drawing from its companions' experience.

So, another device that's doing the same thing in Australia, for example, and it's learning from that from a cloud connectivity standpoint. But what about other kinds of devices that it could potentially be learning from as well?

Mike Drues

00:29:14.880 - 00:33:50.230

So, possibly.

If you're talking about, hypothetically, a cauterizer learning something from an EKG monitor or a blood pressure monitor or something like that, I suppose that's theoretically possible. We'd have to think about that a little bit.

But the direct learning here is from other devices of the same type: its brothers and sisters, so to speak. But let me take that learning example a step further, if it's okay with you, Etienne.

Because, as you know, I like to use really simple metaphors to explain very, very complicated topics. So, imagine that you're in a classroom, back in the day in college, listening to a lecture, and I ask you what you learned.

And you tell me, you know, whatever it is that you think you learned from that particular professor.

And then I ask the same question to the person sitting next to you, who listened to the same lecture. Do you think it's likely that the person sitting next to you will tell me that they learned the same thing that you did? Well, perhaps. I mean, obviously there's going to be some overlap, but there are also going to be some differences.

Even though you both were in the same room, even though you both listened to the same information, maybe one person was zoning out a little bit, you know, for a few seconds. Not like any of us ever did that in a college lecture. And keep in mind, this is coming from somebody who does teach graduate students part time.

I learned a long time ago not to be so naive as to think that my graduate students listen to and retain every word that I say.

And similarly, I'm not going to be so naive as to think that everybody who listens to our podcast listens to and retains every word that we talk about. But nonetheless, the learning experience is going to be a little bit different because of our experiences, because of our thinking, and so on.

Why can't devices that use artificial intelligence learn from one another just as well?

One of the suggestions that I made almost five years ago, which unfortunately FDA has not officially implemented yet, builds on this. We talked before, for example, about the predetermined change control plan, and I think that's a step in the right direction.

It's a baby step, but it's a step in the right direction. Here's another step.

Suppose the software, after a particular patient or a particular number of patients, realizes that there's another way (a better, faster, or more efficient way) to accomplish something, and you don't want to give the software the ability to make that change unilaterally. How about this: the software sends a signal, a report, back to the manufacturer.

The software basically says, "Hey, based on my last 10 or 20 patient experiences, I got the following result, and I think I (meaning the software) can get a better result if I make the following change." That information is then evaluated by the company just as if the company were going to change the device itself.

But the recommendation for the change is not coming from the company directly; it's coming from the device. I think this is a really cool idea, don't you? And then the company decides: okay, is this a valid change?

They go through all of the change management procedures in their QMS to validate this change.

And at the end of the day, they decide whether this is a change they can handle internally via a letter to file, or a change that requires FDA notification via a special 510(k) or, in the Class III universe, a PMA supplement. It goes back to what I said earlier.

The regulatory logic here is exactly the same whether we're talking about AI or not. You just have to use a little imagination, a little creativity, and, dare I say it, a little intelligence in how we implement these things.

But the logic is the same. This is why, throughout all of our podcasts, not just today's, I really try to stress what I call regulatory logic.

Because if you understand the regulatory logic, really all of this should be common sense.
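The device-initiated change workflow described above can be sketched in a few lines. This is a minimal Python sketch under our own assumptions, not anything taken from the draft guidance or the episode: the names `ChangeProposal`, `PREDETERMINED_BOUNDS` and `route_proposal`, the parameter `pulse_width_ms`, and its limits are all hypothetical. It shows a device proposing a change based on recent patient results, and that proposal being routed either into a pre-validated envelope (as a PCCP would allow) or to the company's normal change control, where people still make the letter-to-file versus new-submission call.

```python
from dataclasses import dataclass

# Hypothetical pre-specified, pre-validated range for one adjustable parameter.
# In a real device these limits would come from the manufacturer's validated plan.
PREDETERMINED_BOUNDS = {"pulse_width_ms": (0.5, 2.0)}


@dataclass
class ChangeProposal:
    """A change the device recommends after observing recent patient outcomes."""
    parameter: str
    current_value: float
    proposed_value: float
    patients_observed: int  # reported back to the manufacturer as supporting context


def route_proposal(p: ChangeProposal) -> str:
    """Return the review path for a device-generated proposal (illustrative only)."""
    bounds = PREDETERMINED_BOUNDS.get(p.parameter)
    if bounds is None:
        # The parameter was never pre-specified, so the device cannot change it itself.
        return "company change control (parameter not pre-specified)"
    low, high = bounds
    if low <= p.proposed_value <= high:
        # Inside the predetermined, pre-validated envelope.
        return "within predetermined plan"
    # Outside the envelope: the company evaluates it like any other design change
    # and then decides between a letter to file and a new submission.
    return "company change control (outside predetermined bounds)"


print(route_proposal(ChangeProposal("pulse_width_ms", 1.0, 1.5, 20)))
# within predetermined plan
print(route_proposal(ChangeProposal("pulse_width_ms", 1.0, 2.5, 20)))
# company change control (outside predetermined bounds)
```

The design choice mirrors the point being made: whether the device acts within the plan or reports back to the company, the boundary has to be defined and validated in advance.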

Etienne Nichols

00:33:50.230 - 00:34:23.890

Yeah, I like that.

Anytime we insert the words "artificial intelligence," I like to think: well, what if we put real intelligence behind it instead? Just to make sure I understand what you're saying, I'm going to use your potentiometer example again.

So maybe the surgeon is saying (and I'm talking about things I haven't experienced myself, so let's just say this is a hypothetical), "If I spike it just barely, just for a moment, it does what I need it to do." And the surgeon tells the manufacturer, "I think you should allow me to go just a little bit beyond your current parameters."

It's just another example of what a manufacturer could potentially do.

Mike Drues

00:34:23.970 - 00:35:30.330

It's a great example, Etienne. And not only that, that exact scenario has been happening for decades throughout the medical device industry.

As I'm sure you know, physicians will use a product and then tell the company, either through the salesperson who visits them or some other way (as an R&D engineer, I used to get this all the time), "Hey, I use your device this way, but if you made it a little longer, a little shorter, a little fatter or thinner, I could use it for something else." So this has been happening since the beginning of time, or certainly since the beginning of medical devices.

Now we're just introducing another player into the game. In this case, it's the device, the software itself, that can make some of those recommendations.

Whether you allow the software to implement those recommendations without any input from the outside world (via, for example, a predetermined change control plan), or you have the software send the recommendation back to the company, which then evaluates it and decides whether to implement it, at the end of the day you end up in the same place.

Etienne Nichols

00:35:30.650 - 00:36:13.600

Yeah. Going back to this draft guidance, I don't know if you wanted to speak to any of its specifics.

We've mentioned the predetermined change control plan, or PCCP. I suppose that's an acronym we're going to have to get used to. The PCCP has three elements that aren't really that surprising.

First, a detailed description of the specific planned device modifications. Second (I have it in front of me, so let me read a little of this), the associated methodology: how you're going to develop, validate, and implement those modifications in the future. And the third element is an impact assessment to determine the benefits and the risks.

So at the end of the day, it really goes back to risk management. Any comments on the bones of the draft guidance?

Mike Drues

00:36:14.160 - 00:38:52.310

So, here's a comment that I think is going to surprise some in the audience. You know, we could spend a lot of time talking about the details of those three points.

And I agree with the three points you just mentioned.

I think anybody with an IQ of more than 5 (and I don't mean to be condescending, I'm simply trying to make a point) would agree that they make sense. But what's the precedent for this? Once again, I'm trying to focus on the regulatory logic.

To me, there's a tremendous amount of regulatory precedent here.

One of the examples that comes to mind is from the area of adaptive trial design, for those in the audience who are familiar with clinical trials. Now, this is a topic for a completely different and, to be honest, much more advanced discussion, Etienne.

But what you just paraphrased, and the whole predetermined change control plan in the FDA guidance we're talking about, is right out of the adaptive trial design playbook.

Here's the idea in a nutshell, for those who are not familiar with it. In a traditional clinical trial, everything is locked down, so to speak, before you begin the trial: the number of patients, the number of sites, the inclusion and exclusion criteria, all that kind of stuff.

In adaptive trial design, everything is open. You can change almost anything that you want during the actual clinical trial. But there are some caveats to that.

There are some boundaries to that, because you can't just change anything you want willy-nilly. Otherwise, that would be cheating.

These changes need to be identified in advance, and they need to be validated in advance, i.e., predetermined or pre-validated, whatever you want to call it. So again, in my opinion, the regulatory precedent here, the regulatory logic, is spot on.

If we took this guidance on AI/ML device evolution that you're referring to, scratched out "AI," and replaced it with "adaptive trial design," we would largely have the same thing. So, I don't know if that's a direct answer to your question.

I'm happy to drill into the key points you mentioned a moment ago in more detail, but I'm simply trying to illustrate that there's really not a lot here that's new.

If you just think a little bit and really try to focus on the regulatory logic, and not get so hung up on the letter of the law but rather the...

Etienne Nichols

00:38:52.390 - 00:39:29.630

Spirit of the law. Yeah, that does make sense, especially when you think about that adaptive process.

Maybe your product is already changing in the field, or it has a certain range that it operates within. It makes sense. That being said, I'm curious whether you've seen common validation issues with...

Or maybe validation isn't the issue. Maybe it's forward thinking: the ability to anticipate and actually set good goalposts or boundaries for your device.

I don't know. What are some of the challenges? Maybe that's the question I should ask.

Mike Drues

00:39:29.870 - 00:43:01.970

Well, in my opinion, Etienne, perhaps the biggest challenge when it comes to validation, not specifically with AI but in general, is making sure you're validating the right thing. I'll refer our audience to a webinar I did for Greenlight, probably at least a couple of years ago now, on validating your validation.

A lot of times I see companies do a validation, and it turns out they're validating totally the wrong thing. So what is the point of validating something if you're not validating the right thing?

When it comes to software, and specifically AI or this predetermined validation, whatever you want to call it, the underlying assumption is that of course we're validating the right thing, right? We used the temperature example before, and there's a litany of other examples.

But the first thing, and it sounds very basic, but quite frankly I see a lot of companies screw this up: ask yourself, are you validating the right thing? Or, in this particular case, are you allowing your software to validate the right thing?

Here's another practical suggestion.

When it comes to AI software: most medical devices, certainly not all, have at least a few, and in some cases a lot of, different parameters that the user can vary or control. They might be any number of different things.

Maybe you start by allowing your software, your artificial intelligence, to vary some parameters but not others.

From a basic statistics perspective, it's probably going to be a lot easier to allow the software to vary only one or two parameters as opposed to 10 or 20. If you remember your basic statistics, when you get into...

And I'm going to embarrass myself here, but what the heck is it called when you have multivariable analysis?

When you have a lot of different parameters that could change at one time, the usual way to start is to lock everything down, vary only one parameter, and see what the effects are. Then you lock that parameter and vary the next one.

When you start to vary multiple parameters at the same time, the math and the statistics, and as a result the time and the cost, get really gnarly very quickly. So as a practical matter, start with the low-hanging fruit.

Start by allowing your software to vary only the one or two most important parameters.

Even in the devices I can think of that have a lot of adjustable parameters, most of the time there are one or two that the physician changes most frequently. So maybe ask your users, or ask your marketing friends, "This device has been out there for a while.

Can you tell me, of all the different parameters the physician can change, which one or two are they most likely to change?" Then the R&D engineer or the software designer can design the software to work that way.

That would be another small suggestion I would offer.
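To put rough numbers on why varying many parameters at once gets "gnarly," here is a small Python sketch comparing the one-factor-at-a-time approach described above (lock everything, vary one parameter, then the next) with a full factorial design that tests every combination. It assumes, purely for illustration, that every parameter is tested at the same number of discrete levels (three here); the function names are our own, not from any standard library.

```python
def ofat_runs(n_params: int, levels: int) -> int:
    """One-factor-at-a-time: one baseline run, then each parameter is moved
    through its (levels - 1) non-baseline settings while the others stay fixed."""
    return 1 + n_params * (levels - 1)


def full_factorial_runs(n_params: int, levels: int) -> int:
    """Full factorial: every combination of every parameter's levels is tested."""
    return levels ** n_params


# Compare 1, 2, 10 and 20 adjustable parameters, each at 3 levels.
for n in (1, 2, 10, 20):
    print(n, ofat_runs(n, 3), full_factorial_runs(n, 3))
# 1 3 3
# 2 5 9
# 10 21 59049
# 20 41 3486784401
```

One trade-off worth knowing: one-factor-at-a-time needs far fewer runs, but it cannot reveal interactions between parameters, which is what the exponentially larger factorial design pays for. Limiting the software to one or two parameters keeps either approach manageable.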

Etienne Nichols

00:43:03.330 - 00:43:43.630

That's a good suggestion.

And I think I already know the answer to this, because you're talking about the regulatory logic, and that's a great way to approach this. But if I think about this software as a medical device, or any medical device that includes AI, what about labeling?

I mean, should that even change as the parameters change?

Because just reading through the draft guidance, it's almost as if, okay, I'm anticipating I would need a special 510(k) or a letter to file in six months due to some changing parameters, but since I'm doing this PCCP, I'm not going to have to do that. Would the labeling have changed?

And I'm curious what your thoughts are about that.

Mike Drues

00:43:43.630 - 00:47:24.660

Well, let me answer that in two ways, Etienne, and I know we're getting close to our time, so we can wrap this up soon. I get a lot of questions like this.

In fact, I got a question from one of my customers just earlier today on a similar issue. They have a device on the market that was cleared via a 510(k). Let's say the device is under manual control. They want to come out with a new version of the device.

The new device does exactly the same thing as the old device.

The only difference is that they want the new version under software control, under artificial intelligence. So they asked, "Can I use my previous device as my predicate?" I told them, theoretically, yes.

But remember, one of the two basic criteria of substantial equivalence in the 510(k) is on the technology side. Any differences in the technology, and manual versus AI is clearly a difference, cannot do two things.

They cannot introduce new questions of safety and efficacy, nor can they change the overall risk. So those are the two basic requirements of the 510(k).

I've done lots of podcasts and webinars with Greenlight on that, for those in the audience who need more information. Sure, if we can go to FDA and say, "We're adding AI, but it does not add new questions of safety and efficacy, nor does it change the overall risk," and assuming the label claims are the same, then yes, it is possible to make a strong substantial equivalence argument.

However, in most cases, certainly the cases I'm familiar with, I think that's difficult to do. And even if you could do it as a 510(k), in many cases you might simply be pushing a bad position.

In that case, I might encourage the company to flip to a De Novo, because, as you know, with a De Novo you don't have to play this game of "well, we're kind of like the previous product in these ways, but we're not like it in those ways." You don't have to waste your time playing any of that nonsense. You just say, "My device is new. We're doing a De Novo." End of discussion.

Now let's talk about the cool stuff. So that's the first part of my response to your question.

The second part is labeling, and I think this is the gist of your question. I think you're asking me whether, from a regulatory perspective, it's necessary for us to announce or disclose in our labeling that we're using AI. I would think not. At the end of the day, what's most important is our label claims.

If we say that our device is going to do X, Y and Z, we need to be able to prove that our device does X, Y and Z.

Whether the device does it via manual inputs from a user or autonomously, just by itself, from the user's perspective, who the heck cares?

Now, there are some gray areas, like we talked about earlier. If the software is going to say, for example, "I (meaning the software) think the patient has skin cancer," but you as the doctor can change that if you want to, then obviously you're going to have to disclose it.

But if you're asking me whether it's necessary to say that the device has AI from a regulatory or even an engineering perspective, I would say no. However, the marketing perspective, Etienne, is exactly why I think most people want to do it.

It's the same reason people want to say they have a laser in their device or something like that: it sounds, and I'm dating myself here, Star Trekkie. It sounds glitzy. Does that answer your question?

Etienne Nichols

00:47:24.820 - 00:47:44.660

Absolutely. It answered my question and more; it was really good. I know we're at time, but this may merit a future discussion.

Maybe we'll put that to the audience to see whether they have feedback, would like to hear more, or have specific questions they'd like us to answer. So thank you so much, Mike. Any last piece of advice or words before we go?

Mike Drues

00:47:45.380 - 00:49:33.300

Just at a very high level, my last two reminders. Yes, AI is relatively new, although, as I said before, Isaac Asimov predicted all of this stuff more than half a century ago.

But I'm constantly reminded of the old French philosopher, I never remember his name, who said the more things change, the more they remain the same, you know.

So yes, there are a few differences when it comes to AI and machine learning versus non-AI/ML, but really there are a heck of a lot more similarities than differences. So try to understand and focus on the regulatory logic, and don't get hung up on the minutiae of what the guidance says or what the CFR says.

That's point number one.

My other long-standing piece of advice, Etienne, and to our audience: once you figure out what makes sense to you and get your ducks in a row, so to speak, on how you're going to handle the AI, how you're going to train the algorithm, how you're going to allow for predetermined change control, and so on, take it to FDA in advance of your submission and sell it to them.

Whether you do it in the form of a pre-submission meeting or something else, I don't really care. But sell it to them, because so many of the problems I see companies run into are not only preventable, they're predictable.

So don't treat FDA as an enemy. Treat them as a partner.

But remember my regulatory mantra: tell, don't ask; lead, don't follow. Don't walk into FDA and say, "Hey, I have this new piece of AI. Can you please tell me how to test it?" In my opinion, that is a terrible approach.

So those are some of my final thoughts, Etienne. Is there anything you would remind our audience of as we wrap this up?

Etienne Nichols

00:49:33.540 - 00:50:44.340

Oh, this is all really good. I really appreciate it. I don't have anything to add. I think you covered a lot. I have a lot of links to put in the show notes.

So for those of you listening, definitely check out the show notes and listen to the previous podcast to get a little more background; you'll also find links to the guidance there. So no, this is good. Thank you so much, Mike. We'll let you get back to the rest of your day. Everybody take care. Thank you so much for listening.

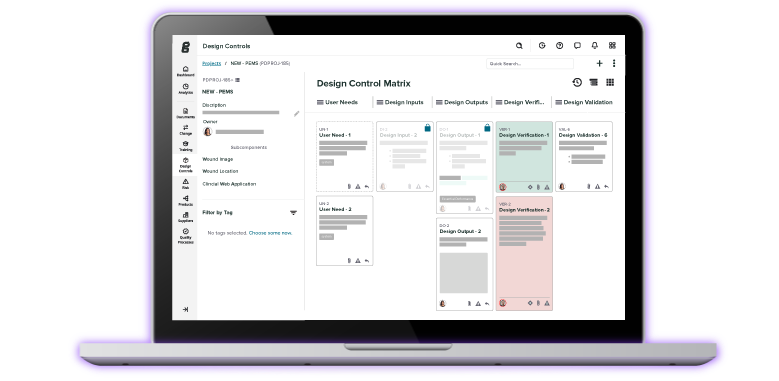

If you enjoyed this episode, reach out and let us know either on LinkedIn or I'd personally love to hear from you via email. Check us out if you're interested in learning about our software built for MedTech.

Whether it's our document management system, our CAPA management system, our design controls and risk management system, or our electronic data capture for clinical investigations, this is software built by MedTech professionals for MedTech professionals.

You can check it out at www.Greenlight.Guru or check the Show Notes for a link. Thanks so much for stopping in. Lastly, please consider leaving us a review on iTunes. It helps others find us. It lets us know how we're doing.

We appreciate any comments that you may have. Thank you so much. Take care.

About the Global Medical Device Podcast:


The Global Medical Device Podcast powered by Greenlight Guru is where today's brightest minds in the medical device industry go to get their most useful and actionable insider knowledge, direct from some of the world's leading medical device experts and companies.

Etienne Nichols is the Head of Industry Insights & Education at Greenlight Guru. As a Mechanical Engineer and Medical Device Guru, he specializes in simplifying complex ideas, teaching system integration, and connecting industry leaders. While hosting the Global Medical Device Podcast, Etienne has led over 200...