In this insightful episode, Eric Henry and Etienne Nichols delve into the evolving landscape of AI in MedTech, focusing on regulatory compliance and the future of AI in medical devices.

They discuss the role of the FDA, FTC, and other regulatory bodies, and explore the implications of AI in product development and quality assurance.

Like this episode? Subscribe today on iTunes or Spotify.

Some of the highlights of this episode include:

- FTC's Growing Role: The FTC may soon have broader enforcement authority over AI across various industries in the U.S.

- Algorithmic Disgorgement: A tool that allows the FTC to force companies to delete an algorithm and all its associated training data.

- Evolving Regulatory Landscape: The FDA is adapting its regulatory framework to accommodate AI, focusing on adaptive and generative AI.

- Challenges with Locked Algorithms: Current regulatory frameworks primarily support locked algorithms, but there's a movement towards adaptive algorithms.

- Impact of AI on Quality Systems: AI is set to revolutionize quality management systems and manufacturing processes in the life sciences.

- Importance of Pre-Market and Post-Market Oversight: Both are crucial for ensuring the safety and efficacy of AI-driven medical devices.

- The Role of CSA in AI Integration: The transition from CSV to CSA could influence how AI is integrated into software systems.

- Harmonization of Standards: A significant challenge in AI regulation is the harmonization of numerous standards being developed globally.

- Public-Private Partnerships: Collaborations like the AI Global Health Initiative are vital for advancing regulatory frameworks in AI.

- The Need for Industry Engagement: Active involvement in AI-focused organizations can help businesses navigate the evolving regulatory landscape.

Links:

- Visit Greenlight Guru's homepage for insights on streamlining product development in MedTech.

- Follow Eric Henry on LinkedIn for updates on AI, quality systems, and regulatory compliance.

- Explore the AI Global Health Initiative under AFDO and RAPS sponsorship.

- For questions or consultations, contact Eric Henry at ehenry@kslaw.com

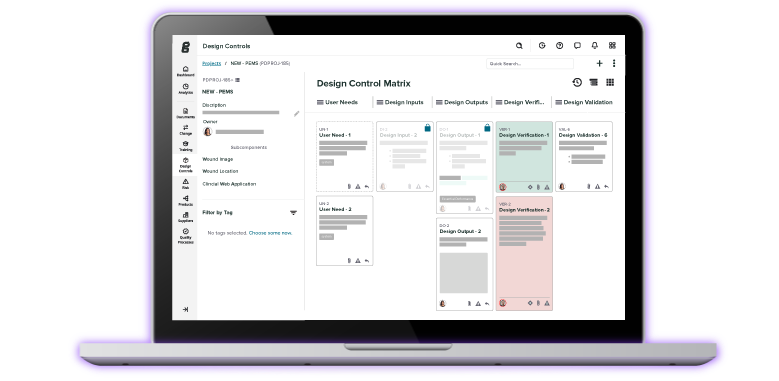

- Learn how Greenlight Guru's QMS Software can help your medical device company.

Memorable quotes:

- "We're seeing pushes into adaptive algorithms... algorithms that modify themselves in the field without human oversight." - Eric Henry

- "The FDA and other regulators are no stranger to the issues in generative AI as well." - Eric Henry

- "Keep an eye on the FTC... they have a tool called algorithmic disgorgement, which can have significant implications for AI in life sciences." - Eric Henry

Transcript

Etienne Nichols: Hey everybody. Welcome to the Global Medical Device Podcast. My name is Etienne Nichols. I'm the host of today's show. With me today to talk about AI is Eric Henry. Eric, how are you doing?

Eric Henry: I'm doing pretty good, man. How are you?

Etienne Nichols: Great to have you. I'll give a quick bio, and you can correct where you feel. Eric is a 35-year industry veteran. He's led global technical quality functions at some of the medical device industry's largest players. He's now working for the law firm King & Spalding, where he provides advisory consulting services and focuses on regulatory compliance, enforcement and policy matters involving industries regulated by the FDA. He provides guidance to industry professionals on FDA requirements, quality system requirements, inspection preparedness, and postmarket obligations.

Also, for those of you who aren't familiar with Eric Henry, I highly recommend that you go follow him on LinkedIn. That's where I get a lot of really fun tidbits about QMSR, AI, whatever is really hot in the industry. Eric seems to have a finger on the pulse. I'll throw one other thing out there just to tie this back to AI, and then I'll stop this TED Talk of a bio, but he is also a strategic committee member for the AI Global Health Initiative under AFDO/RAPS sponsorship and co-leads the initiative's GMLP working team. So very cool to have you on the show today. Glad to have you. What is going on with AI right now?

Eric Henry: Yeah, well, what's interesting, I think, about the landscape in AI right now is twofold. Number one, the sort of locked, traditional AI that frankly has been around since the mid-'90s is actually becoming old news. We're seeing pushes into adaptive algorithms. We don't yet have regulatory frameworks for those in the device world, but everybody's talking about algorithms that learn. I use that in air quotes, but algorithms that will modify themselves in the field, in their use environment, without human oversight or without human intervention that would prevent them from making those updates.

We all are very familiar with the mushroom cloud of generative AI talk that's going on in the marketplace and in the news these days. And the FDA and other regulators are no stranger to the issues in generative AI as well. So, at a big scale, I think we're seeing that. We are seeing everybody and their puppy trying to release a policy-level document or something along those lines: ten principles for that, twelve principles for this, six things to keep in mind. And coming out of that, some regulatory work, but most things are still in draft. So, I think that's sort of the big push right now. It's a lot of regulatory activity on the cusp of becoming enforceable and real in the lives of industry.

Etienne Nichols: Yeah. So, when you talk about the part that's old news, the artificial intelligence that may not be just as generative as we're talking about now, my understanding is some of those devices, at least when we look at those devices, if you look at the FDA website, for example, most of the devices that claim AI are radiological. I don't know if that's still the case. It's been a while since I've looked at that.

Eric Henry: My first medical device company, actually, back in about 2002 or 2003, we bought a CAD company, a computer-aided detection company. Nobody wanted to say the D meant diagnosis, but everybody inside the company was going, yeah, we could do that. Effectively, what that is, and what we're seeing in the radiology space, is you just get 1,000 or a million or 100 million images in a particular modality (CT, MRI, PET, mammography) for a particular body part or condition, and then you create an algorithm that looks for patterns in those images, where a particular pattern would represent something of interest to a radiologist or a physician.

And that's been going on. CAD companies have been around since the 90s. They've been marking areas of interest on images, on screens since then. And a lot of those are initially trained. And the reason we call it old school is not only because they've been around a long time, but because we don't implement active learning or active changes to those systems in their operating environment without going through kind of a traditional software update process like you would get on your iPhone or your laptop. They decide what they want to change, they make a change, they roll it out after they've tested or done whatever.

And so those systems that work that way are just called locked. They're locked algorithms. And the FDA and pretty much every regulator in the world right now in the life sciences will only support locked algorithms, although, as I said earlier, there is a movement towards opening that up if we can figure out how to keep things safe and effective with them changing without human intervention.

Etienne Nichols: Now, the FDA came out with a guidance, the predetermined change control plan. We have a new acronym, I guess, the PCCP.

Eric Henry: That's right.

Etienne Nichols: How does that... does it still really only handle a locked algorithm?

Eric Henry: Yeah, I'm glad you asked that question, because it's been an area of frustration for me and a lot of people, especially those of us in this strategic committee that you mentioned. That committee is a collaborative community that engages with the FDA directly. And we were really excited before the draft came out because the FDA seemed to indicate that they were going to create a framework that, frankly, would be the first in the world to support adaptive algorithms and find a framework that would allow companies to describe what changes might occur and how you would ensure those changes are safe and effective in the field.

And so long as they stayed within that box, it could make those changes in the field and be okay without having to go through the traditional design controls change management kind of program. So, we were all excited. The draft came out, and that wasn't what happened at all. What happened instead was the predetermined change control plan still gives you that ability to again draw a box around what changes are going to occur and how you'll ensure those changes will remain safe and effective. And there's a risk management, an impact analysis sort of component to it as well. But then the guidance goes further to say that you must then implement and use formal quality system design controls and design change processes on every modification. You can't very well do that in the field, automated, without a human intervening.

So that pulls that learning back to the mothership, back to the manufacturer to go through that process, and it really just falls back to a traditional release process. What PCCP allows is where those changes might otherwise rise to the level of requiring a submission, either through the PMA or the 510(k) or the other programs. They wouldn't. If you have an approved PCCP and the modifications that you're processing fall within the bounds of that approved PCCP, you would not have to do a new submission for those changes. You could just process them as a normal design change.

Etienne Nichols: Yeah, I'm glad you brought that up, or I'm glad you clarified that, because I've actually seen a lot of differing opinions on the web on what the PCCP covers. And when I read it, I thought there's no way that's going to cover a change in indication for use down the road, as some people seem to think. Right. That's stretching it way too far. So, with that being said, and you said that the hope was this would be the first in the world where we came out with a way to do this. Can you describe how they could come out with a framework that could potentially not have to bring everything back to the mothership? In your working group, is there a conversation about how to accomplish that?

Eric Henry: Yeah, we do talk about it. It's obviously purely academic and theoretical at this point, and our views of the world. But I could conceive of ways where the FDA might eventually approve or clear automation within a device that, through the verification that you do before release, would show that changes, again within some boundary, would be tested in an automated fashion using an automated testing capability inside the software of the device itself. It could then report back to the manufacturer the results of that test so the manufacturer could confirm that, number one, the changes that it's thinking about are within the constraints that have already been communicated and cleared by the FDA.

And number two, that they passed to such a degree that when those changes are implemented, they don't violate those conditions, and that there's not some compromise to safety or effectiveness or performance in some other way. So, what I see is adaptive algorithms would have to come with some baggage on top of them that would automate a lot of the design change process that we currently bring back to and do in a more proceduralized way inside manufacturers. I don't think we're close to that right this second. I think the FDA is flirting a little, even with just using its existing design controls authority, design controls processes, and the PCCP, flirting a little bit with companies that are doing some unaccompanied, unattended changes in the field. But it's one of the reasons why they really stress doing pre-submission meetings with them if you can, and if you've got AI in your system, because they're learning as well.

And I do foresee a time when a company can come to them and say, these are the kinds of changes. They may be fairly innocuous, they may just improve the performance, they may improve the accuracy of the prediction, reduce false positives or false negatives, for instance, if you're in a radiology setting. It's automatically tested, we get a report, and there's a 24-hour delay before the system implements it. We have that time to review. We can veto that change if it looks like something's gone wrong. Otherwise, we let the system go on its own. That's just a hypothetical, but I can see something like that, where the FDA goes, that sounds interesting, let's talk more. But I haven't seen anybody go there yet. And frankly there's risk, there's legal risk, there's all kinds of risk that goes with a system that's willing to take that first step into the adaptive space.
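Editor's illustration: the hypothetical Eric describes (changes confined to a pre-agreed box, an automated test report, a 24-hour review window, and a manufacturer veto) can be sketched in a few lines of Python. This is only a sketch under stated assumptions, not anything the FDA or the PCCP guidance prescribes. Every name here (`ChangeEnvelope`, `ProposedUpdate`, `may_deploy`) and the choice of sensitivity and false-positive rate as the bounded metrics are invented for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Assumption: the 24-hour figure comes from the hypothetical in the
# conversation, not from any regulation or guidance.
REVIEW_WINDOW = timedelta(hours=24)


@dataclass(frozen=True)
class ChangeEnvelope:
    """Hypothetical pre-agreed 'box' that a self-update must stay inside."""
    min_sensitivity: float
    max_false_positive_rate: float


@dataclass
class ProposedUpdate:
    """Results the device's automated self-test reports to the manufacturer."""
    sensitivity: float
    false_positive_rate: float
    proposed_at: datetime
    vetoed: bool = False  # set by a human reviewer during the window


def within_envelope(update: ProposedUpdate, envelope: ChangeEnvelope) -> bool:
    """True if the tested performance stays inside the agreed bounds."""
    return (
        update.sensitivity >= envelope.min_sensitivity
        and update.false_positive_rate <= envelope.max_false_positive_rate
    )


def may_deploy(update: ProposedUpdate, envelope: ChangeEnvelope, now: datetime) -> bool:
    """Deploy only if inside the box, not vetoed, and the review window has elapsed."""
    if update.vetoed or not within_envelope(update, envelope):
        return False
    return now - update.proposed_at >= REVIEW_WINDOW
```

The design point is that the default after the window is "go", so the human role is a veto rather than an approval. Whether a regulator would accept that default is exactly the open question in the discussion above.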

Etienne Nichols: Yeah, that makes sense. I'm trying to think of an example that we could use. I mean the radiological example is a great use case or example for the locked algorithm. Have you got an example where this might apply to an unlocked something along those lines that would be more unlocked?

Eric Henry: Yeah, you can also use radiology examples there, I think. Let's say I've got a mammography use case, and you have these microcalcifications, which, if you've not seen a mammogram, look like little white grains of sand, little white specks in an image. When they begin to cluster, that could be the beginning of a mass at some future point. And those are the things you want to test and try to remove. And to a person who's untrained, it just looks like little white specks everywhere, and everybody has them in their breast tissue.

That's why you get a baseline at 50 or 45 or whatever the guidance is now. And then they look for movement over time, over the years. Well, if you had some set of images that gave you a sense of what that movement over time might look like as they begin to cluster, and then you have different breast types that are more dense or that include implants or that are different sizes and shapes. Mammography images differ from patient to patient more than just about any other modality out there. Right.

Etienne Nichols: Wow.

Eric Henry: So, the more patient data you have, of the widest variety of images, where you can see beneath, behind, under, around dense, different types of tissue to see this activity happen that could lead to something, the more accurate you are. And so, I think that's the kind of thing that we would look for. Whether it's in the cardiology space, where I'm trying to predict some event that might occur in a patient, it could be. I know there's work going on right now in the epilepsy space where I can use EEG readings to potentially predict epileptic seizures in advance of them occurring.

So that a severe patient could maybe wear a device that would allow them to be free again and have a warning when something might be coming at a future time. So, these are all the kinds of things that, once they're trained on a locked set of data, have a certain level of accuracy that gets them into the released space and cleared space. But the more data they get over time in the real world, the more accurate and the more predictive those can be.

Etienne Nichols: Yeah, that makes sense. Leaving the examples kind of behind a little bit, maybe. I am curious. I didn't get to attend the AI event. I think it was the RAPS event in Cincinnati.

Eric Henry: Event, yeah.

Etienne Nichols: Were you at that? I don't know if you had any gleanings from what's going on, even more from the regulatory standpoint and the conversations that are going on.

Eric Henry: Yeah. Yes, I was there. I would have been crucified being part of the committee organizing it had I not shown up. And I was on stage a lot, so that would have left some awkward.

Etienne Nichols: That's what I thought. Okay.

Eric Henry: There was a lot of great discussion there. There were some good workshops where we had the audience download software on their laptops that would allow them to build rudimentary machine learning algorithms, and had some data sets available to them, just to get a sense of what it was like to build an algorithm and train it and validate it and use it in a three-hour workshop.

Etienne Nichols: Wow.

Eric Henry: But on the regulatory side, we had some great conversations with people from the Digital Health Center of Excellence, who are the people we collaborate with most. They sent, probably, I don't know, eight or ten people to the summit. I got to moderate a couple of panels that had Troy Tazbaz, who heads the Digital Health Center of Excellence. On that same panel was Dr. Brian Anderson from MITRE, who runs the Coalition for Health AI, as well as Bakul Patel, who was the head of the Digital Health Center of Excellence and is now at Google.

And so, we had a lot of discussions there around what the regulatory frameworks are going to look like. Right. What can we build? Where's the dividing line between company responsibility and regulator responsibility? What's the role of standards? And some of the messages that came out were, Troy made the statement that there's 200 organizations out there building AI standards right now.

Etienne Nichols: Wow.

Eric Henry: So, if you have 200 standards, you don't have a standard, right?

Etienne Nichols: Yeah. By definition.

Eric Henry: By definition. Standards are great. Everybody should have one. But so that sort of came out, where he says we need to work harder at taking those efforts and harmonizing them to where the messaging and the requirements begin to align across all these different efforts out in the field. Troy is also a very big advocate of companies understanding that safety isn't the only risk in the world that a business worries about. Right. There's business risk, financial risk, legal risk, there's all kinds of risk.

And the message that he delivered in particular was that companies need to, if they keep in mind all of the wider variety of risks that they have, that they have to think about, that will drive them into better behaviors than if an FDA just pounds on them all the time, because FDA is going to pound on them in a certain lane in terms of risk. And you may or may not, if you ignore other types of risk, you may or may not meet that expectation. But if you're thinking of legal risk and you're thinking of reputational risk and other things, you might do the right thing just to avoid the consequences of those kinds of risks, too. And, oh, by the way, it makes your product safer.

So that was a big message that came out. And I'd say the third thing that struck me was that the FDA sees that with AI, especially as we get into adaptive algorithms and start thinking about the generative AI space, the regulatory oversight attention will be focused much more in the post market space than it is in the pre-market space. Right. If we have a certain level of confidence in the pre-market space, we would release the product, clear the product, but then there would be a lot of very heavy oversight to make sure that as those algorithms learn and evolve over time, they remain safe and effective and that they improve in their performance.

Etienne Nichols: Okay, that's a really good point. And it makes a lot of sense, especially with the examples that you gave. That being said, what kind of advice would you have for companies as they're maybe developing some of these or trying to get these to market? With that beginning with the end in mind mindset, I'm going to have a lot more oversight in the field. What are some of the things that I need to be doing? Any thoughts or piece of advice you might have?

Eric Henry: Yeah. And actually, it's not advice that's new or unique to the AI.

Etienne Nichols: I know.

Eric Henry: Yeah. But I would say for those of you familiar with the V model, right, sort of that timeline bit in the middle where the stuff on the left is the design and requirements and architecture, and the stuff on the right is all the testing and stuff that you do. Focus on that left side of the V, and don't believe that just because it's AI, it's a black box and I don't need to put the details in my design or architecture. Create that sort of vertical decomposition from requirements to architecture to design.

And yes, do it modularly, do it iteratively, create sort of component-level or object-level or modular-level, unit-level types of lifecycles that are sort of mini spins inside of that. Be as iterative and as flexible as you want to be and can still support with evidence, but have those elements in place. And the reason I say that when thinking about the post market attention is because design change is most effective when you don't waste time and when you focus on the right things. And the way you don't waste time is by understanding the relationship of all the parts of your system to all the other parts of your system.

You have a good architectural basis for every decision that you make. And when you go back and decide to change something, you know what that thing touches. And through a level of impact assessment, you can target your verification work, you can target all of the potential reportability, all the potential submission implications, the design work, the upstream and downstream changes, and communications that you may have to make to customers, if you know better what the ecosystem is around that change and what risks may have been impacted.

Without that, you're only left with, I have to test everything every time, and you don't know if you really targeted and focused on the right things and you run the risk of missing something important because you didn't know the nature and the impacts of that change. So, focus on the left side, have that well-structured. It's tough in our industry now because a lot of people coming into AI development in the medical device space are pulling talent from people that came from unregulated industry or a differently regulated industry. And they'll bristle, they'll rebel at the thought of all of the evidence and all the process steps that the FDA and that our notified bodies would require. But it's important and I think it'll lead to a better post market outcome if you'll follow those steps.
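Editor's illustration: the impact assessment Eric describes, knowing what a changed component touches so verification can be targeted instead of retesting everything, amounts to a walk over the architecture's dependency graph. The sketch below is a minimal Python example under that assumption. The module names and the `DEPENDENTS` map are invented, and a real traceability matrix would also link requirements, risks, and test cases.

```python
from collections import deque

# Hypothetical architecture map: each module lists the modules that
# directly depend on it (i.e. what a change to it could affect downstream).
DEPENDENTS = {
    "image_preprocessing": ["lesion_detector"],
    "lesion_detector": ["report_generator", "alerting"],
    "report_generator": [],
    "alerting": [],
    "audit_log": [],
}


def impacted_modules(changed: str, dependents: dict[str, list[str]]) -> set[str]:
    """Return the changed module plus everything transitively downstream of it."""
    seen = {changed}
    queue = deque([changed])
    while queue:
        module = queue.popleft()
        for downstream in dependents.get(module, []):
            if downstream not in seen:
                seen.add(downstream)
                queue.append(downstream)
    return seen
```

With this map, a change to `lesion_detector` flags `report_generator` and `alerting` for targeted verification, while `audit_log` stays out of scope. Without the map, the only safe answer is to test everything every time.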

Etienne Nichols: Yeah, and I 100% agree. And it's interesting that you say that about pulling talent from other industries, whether regulated or less or differently regulated. I think that's a good way to put it. That's the case that it's been for software as a medical device. And so, this is really not particularly new. And I'm going to circle back to what you said earlier. The advice you have for companies is not necessarily new. You really need to go back to the basics and really master those fundamentals of design controls. But I'm going to go ahead and layer another question on top of that. And that is, is there something new?

And I want to throw an example out. So I was at RAPS, and one of the plenaries, of course, was AI. Everybody wants to talk about AI right now, myself included. But the second plenary on the second day was psychedelics, from the drug side: how do we do that? And I listened to them and all of the different considerations they had. It was a risk-based approach, it was safety, it was performance. It was nothing new from a clinical trial perspective compared to any other drug development. And so, it made me think, okay, with the AI development, there is potential for differences. Are there any differences in your mind?

Eric Henry: I think there are. And the one thing that kind of stands out to me is the speed with which things can happen and the massive amount of data that is going to be used for a lot of the future use cases around AI, especially as we move into the generative space. I think currently we have a credible argument that the existing lifecycle models, the agile scrum type models that have existed since the late '90s, the traditional software lifecycle models that we see in the IEC standards from 2006 and later, even the design controls guidance from 1997 from the FDA, all of these can still be used. And the FDA has used them. They've publicized how they've used them to clear 700 plus devices with 20-, 30-, and 40-year-old regulatory frameworks. I don't know that that's going to be true forever.

Etienne Nichols: Okay.

Eric Henry: And I think what we're going to have to think through is what does a lifecycle model that ensures confidence and is transparent, which is a big buzzword, I know, but it's really that idea that people understand and have confidence in the way you develop the algorithm, the data you trained it to, the data you validated it against, such that when it makes decisions, even if I can't understand each individual decision, I can understand. I have confidence in it because I have confidence in the way it was developed and trained and validated.

So as long as we develop models that maintain that level of transparency and increase that level of confidence, I think we're going to be okay. And I don't know. There are people out there, I think, that have some ideas of what a new lifecycle model might be that might support development of AI models in the generative space that might still be used to show safety and efficacy. They're likely to be much more iterative, much faster moving. I think everything gets developed in much smaller pieces.

I think ownership of data sets and how we deal with where the data comes from, who owns it, how we maintain it is going to be a big issue that's going to influence the model itself. Lots of open questions, I think, in that area that just really have me scratching my head right now. And I think that we just don't know where it's going to go.

Etienne Nichols: It's interesting to be in a space that is so, I mean, like you said, some things are old news, but some things are really new, especially to think about how to regulate this and develop it. What are some of the resources or ways just besides following guys like you and other people in your working group? How do we really learn the right way of thinking about this?

Eric Henry: Yeah, I think getting involved with some folks that are interested as well. There are a lot of public-private partnerships out there that people can get involved with in their company environments. Obviously, organizations that we all know very well, like MDIC and AdvaMed, are heavy into this space. A lot of new... in fact, you can't swing a stick right now without hitting a new organization that has interest in AI or health. You know, I know Duke University has the Health AI Partnership that they work with a lot of health delivery organizations on.

A lot of companies across academia, industry, regulatory bodies, and healthcare providers have joined things like the Coalition for Health AI and the Health AI Partnership. Our group, I think is probably one of the best. If you just are interested and maybe your company or your employer doesn't know, they can't afford to put you in some of these bigger things.

We don't charge anything. We've got a great mix of academia, industry and regulators. We are a collaborative community working with the FDA. We've also got connections with ONC, National Academy of Medicine… all these other organizations we're constantly bonding with. And really the best way for you to get involved is just to go on the website, say I'm interested, and we find a way to loop you in. But find organizations like us or others that have that level of interest.

And here's my biggest piece of advice. Find organizations that do stuff. They don't just write stuff, right? Everybody's got a white paper. If you subscribe to some of the places I've subscribed to, your eyes glaze over every morning as you scroll through all the new white papers on AI. We're writing white papers as well. But those white papers need to lead to some action. They need to develop, turn into tools and models and regulatory frameworks and training something that companies can use.

So, find a group that does stuff and join that group and add your thinking to the mix. And if you find a good group, and I think, again, ours is a good example of this, we have a wide range of people, from those that are just figuring out what AI is all the way to people that are data scientists and do this every day. So go in new, learn and then contribute, once you learn enough and you think you've got something to contribute.

Etienne Nichols: I can see that being a good blend because they'd be able to be the translator, I guess, to the voice of the industry, to those who maybe are just entering the industry at the very beginning. So, I do have another question, though. We talked a little bit about the product itself, the medical device itself, but that's not the only place AI is going to exist in the future.

I know recently, I guess this year, I look at this year and I think, wow, we had so many different regulatory changes. That's probably the case every year, but this year felt especially that way. CSV to CSA was one of those things. Computer software assurance. Is there any impact or what are the impacts do you think AI will have on just the software that surrounds the development of a medical device?

Eric Henry: Right. Well, first of all, I think the CSV to CSA transition is a great way for consultants to make a lot of money. Yeah.

Etienne Nichols: Should have been risk based.

Eric Henry: Yeah. But I think in the end, it's probably not going to be that traumatic of a transition. In fact, if you listen to the FDA guys that were involved in that guidance talk, most of them are, you know, the core message is lighten up, people. But that being said, the application... in fact, I'm involved, as you said earlier, in the good machine learning practices working group, which focuses on AI and products.

We have another working team that we call AIO, AI and operations, and it focuses on what you just asked about, which is AI in the quality system and AI in manufacturing. Both of those spaces, by the way, cross the streams in terms of medical device, pharma, all the FDA regulated industries, from tobacco to food to veterinary products to cosmetics. This is something that will span across all those disciplines, all those industries. In terms of its relevance, I see a significant push and a lot of work being done in the manufacturing space, and on the pharma side especially. We know that the FDA's published out of CDER, the Center for Drugs.

They published a couple of white papers on drug discovery and drug manufacturing and the use of AI there, where they're trying to get their thinking going. They seem to be running about five years behind, four or five years behind the center for devices, which does my heart good because I'm a device guy. Always nice to be in front. But on the QMS side, what we're seeing, and again, keep in mind that on the drug side, some of the manufacturing work becomes part of the NDA, part of the drug application.

Right. So, we will see clearance of AI technologies in that manufacturing space, although there's not the kind of regulatory framework in the drug space to support that technology in a drug application, even as there is if you take the 20- or 30-year-old device regulations and standards. Right?

Etienne Nichols: Yeah.

Eric Henry: So, the drug regulations don't hit the technology. Even if I go to existing frameworks in the device space, I have something I can lean on to, say, use these kinds of lifecycles, have these disciplines in place. I don't necessarily have that on the drug side, but they are going all out, some of these companies, on putting AI into the manufacturing place.

Etienne Nichols: Really? Okay.

Eric Henry: And then I see on the quality system side, there is some obvious low-hanging fruit, right? We have complaint management, where I can detect patterns and find common complaints and do better trending and things of that nature through machine learning as it applies to something in the complaint space. Both the FDA and industry, I think, are starting to look at AI for things like predicate device identification, literature reviews, for clinical review, also for clinical evaluations, and also for risk-benefit analysis. So, there's a lot of places where AI is going to come to bear. I think on the quality system side, manufacturing is really becoming a hotspot for this and, in my view, a hotspot that's still struggling with how the regulatory framework will support it. But yeah, it's not all just on the device side for sure.

Etienne Nichols: Yeah, some of those things like complaints and so forth. Is it going to be similar to how we just kind of talked about devices, how it really is different, things change so quickly, so the regulatory framework is going to have to catch up and potentially evolve to handle all these things? Is it going to be similar? You know, the CSV, CSA, tongue in cheek, same difference. Is there going to be an evolution to handle AI, or do you think things are relatively set to be able to handle the injection of AI for certain things like complaint handling and other additions to the QMS?

Eric Henry: Yeah, I think it's going to get tricky. In fact, this is one of the ways where CSA will help, because the CSA draft guidance, which by the way is still a draft, everybody should remember that, the CSA draft guidance says specifically that the FDA will focus its enforcement work, to paraphrase, on systems that are directly related to the safety and effectiveness of the device or the product.

Etienne Nichols: Okay.

Eric Henry: And so, things like, I don't know, CAPA management or some other trending type thing or manufacturing yield or something along those lines, if it doesn't impact the safety of the product or the effectiveness of the product, performance of the product, you might be able to move faster in the deployment of AI technologies there. Especially if you start thinking about adaptive technologies or even autonomous technologies, right.

That do their day-to-day work without human intervention in that space. Because from a regulatory standpoint, you would still be in violation of the regulations. 21 CFR 820.70(i) is the place in the regulation where it says if you have a computer system that automates manufacturing or the quality system, you have to validate it according to an established protocol, and you have to validate all changes before they're implemented. That language is very explicit. Right.

And it's in the device reg. And if you look at ISO 13485. So, as we migrate, you and I have had some little get-togethers before on that migration to 13485 in the QMSR. The proposed rule that's out there now, that we're expecting any second now as a final rule, as we record this, it is at the White House for final regulatory review before publication. That standard, in its 2016 edition, had several aspects of it that were intentionally aligned with the FDA regulations.

Software validation for non-device software was one of those things. And so, its language now mirrors much of what we see in the US language, which says validate changes before you release. Okay, this gets to be a problem if you're not going to do that with an adaptive algorithm. So, I think the fact that FDA's enforcement will focus on product impacting systems that are higher risk doesn't make it compliant but may give you some wiggle room to try out these new technologies that you wouldn't have if the FDA was as broad based as we've seen under the CSV mandate.

Etienne Nichols: Interesting. Okay. Wow. I didn't expect to be as wide-ranging as we've been, and apologies for that. I don't know if you were excited either, but you've made me think, because I've been seeing some of the murmurings about the potential for ISO 13485:2016, time for feedback from the industry to potentially revise this, which, if you rewind the clock to 2022, when they first started proposing QMSR at that meeting with FDA and industry, they said, yeah, it won't be updated for another five years, guaranteed. And now here we are. QMSR is on the cusp. Is that one of the driving factors? Maybe not the driving factor, but it seems like it has to have something to do with the AI on the landscape here.

Eric Henry: I think AI is going to. You're going to see it pop up in a lot of places, right? I mean, the FDA's pre-market software guidance, as it was finalized in 2023, it said, oh, yeah, this applies to AI, too. And the pre-market software guidance, it was also finalized this year in its newest revision. Oh, yeah, that applies to AI, too. They had to insert that sentence in a couple of places in both of those.

So, I think it's possible. I don't know where this will end up. Yeah, it's speculation, but it's possible that the regulation, the standard, would allow for that. Here's the problem, though. The QMSR proposed rule is specific to the 2016 revision, and a modification that would allow it to go to a revised 13485 would require the rulemaking process to start all over again. And for those of you that have been on the ride, like me, from the beginning, it's measured in years. And so, by the time that hit the street in the US, they'd be ready for the next revision of 13485.

Etienne Nichols: Maybe this is a conversation that isn't. We don't want to give anyone a stroke or anything, but that's really interesting to think about. So, we're talking just while we're on the subject of standards. I can't help but think of what you said, Troy said, about the 200 plus standards that are being developed out there. Do you see on the horizon or what the timeline could be for a unified standard as it applies to AI and medical devices?

Eric Henry: I foresee increased focus in that area. Its success is very much up in the air. But I can tell you that the FDA, although you might not get this message if you saw recently that they left the, was it the Global Harmonization Working Party or something like that, this Asia-focused sort of international regulators' body, and there were some reasons that they left that don't have anything to do with their priorities so much as the level of involvement they had in that group.

But the FDA is very focused, and I'm also on the MedCon committee, which meets every spring, and the FDA sends a large group of maybe 40, 50 people to that every year. We get a lot of insight into some things that are coming up during that conference. I would encourage people that are able to, to maybe try to make it to that. But harmonization and mutual recognition are very high on the FDA's priority list. Involvement with IMDRF, which, by the way, they chair in 2024, is a big deal.

The International Medical Device Regulators Forum, involvement through ANSI in the work of ISO and IEC, very high on the list. As you know, if you buy or use AAMI standards, the Association for the Advancement of Medical Instrumentation, the FDA, on any significant document that they write, is usually, if not a co-chair, at least a member of the committee.

They are very interested in this harmonization work. So, they're part of those 200 or so odd people developing standards. But I think they're involved in each of these with an agenda in mind, and that is to promote harmonization. And I think I see even in some of the proposed rules that other HHS departments are putting out, like the ONC, the Office of the National Coordinator for Health IT, they are citing industry papers and thinking in the rule, in the regulation, as drivers for some of their language. They are trying to bring all of this together and harmonize that language. So, I do see those efforts.

Now, there are always exceptions that make us wonder if this is going to play out well. How involved or interested is the FDA in adopting something that would conform with the EU AI Act? I don't know. Yeah, I don't think so much, because a lot of us in the industry see some overreach there. So, I think there is a lot of interest in harmonization. I don't know how successful it will be, and I think it'll be two steps forward, one step back for a while.

Etienne Nichols: Yeah. Well, very cool. I appreciate you coming on to have this conversation. Any last pieces of advice or words or pieces of news you'd like to share? Any last bits before we close out?

Eric Henry: I will share one thing, and it's something that, as I talk about the current and future state of AI regulatory work in the life sciences that I ask people to think about and focus on that they may not have before. And that's the Federal Trade Commission, the FTC, they have enforcement authority, and the Senate has proposed legislation that would give them more broad enforcement authority over artificial intelligence across industries in the United States.

They have already brought to bear this enforcement authority in other industries with large companies where they can impose fines, they can do a lot of the things that the FDA can do to people in the medical device industry. They also have a tool at their disposal called algorithmic disgorgement. And this tool allows them to force a company to delete its algorithm from everywhere it exists. And all data that was used to train or validate or to further educate that model, all data is gone, and the algorithm is gone.

Algorithmic disgorgement. Keep an eye on the FTC, keep an eye on what they're doing and where they're focusing their attention, because I think it's only a matter of time before they pick a large medical device or life sciences company and decide that the advice that an AI system gave was misinformation to a consumer, and would then pursue and start using their enforcement tools in that area. So, it's just another thing, yet another acronym to keep your eye on as time goes on.

Etienne Nichols: Very interesting. Yeah, I appreciate you sharing that. That's really great. Where do you recommend people go if they wanted to reach out to you? I know LinkedIn, we'll put links in the show notes, but where can they find you?

Eric Henry: LinkedIn is a good one. And again, I try to post things that help the industry where I can. If you want to know places I'm speaking or will be speaking, I don't typically post that, but it's in my profile. You can look there in the featured section. ehenry@kslaw.com is my work email address. Feel free to reach out to me there, and who knows where I'll pop up next to talk about some of these topics.

Etienne Nichols: Well, fantastic. Well, hopefully we'll meet in person at MedCon. And yeah, it's been great getting to talk to you. Those who've been listening, you've been listening to the Global Medical Device Podcast. We recently did a webinar with Eric on QMSR. Well, I say recently, it may have been a month or two ago, but we're just sending it out again just as a reminder now that we're kind of on the cusp of that going live. So, look out for that. We'll put a link in the show notes as well. And thank you so much, you all. Y'all have a good rest of your day.

Take care.

Thank you so much for listening. If you enjoyed this episode, can I ask a special favor from you? Can you leave us a review on iTunes? I know most of us have never done that before, but if you're listening on the phone, look at the iTunes app. Scroll down to the bottom where it says leave a review. It's actually really easy. Same thing with computer. Just look for that leave a review button. This helps others find us and it lets us know how we're doing. Also, I'd personally love to hear from you on LinkedIn. Reach out to me. I read and respond to every message because hearing your feedback is the only way I'm going to get better. Thanks again for listening, and we'll see you next time.

About the Global Medical Device Podcast:

The Global Medical Device Podcast powered by Greenlight Guru is where today's brightest minds in the medical device industry go to get their most useful and actionable insider knowledge, direct from some of the world's leading medical device experts and companies.

Etienne Nichols is the Head of Industry Insights & Education at Greenlight Guru. As a Mechanical Engineer and Medical Device Guru, he specializes in simplifying complex ideas, teaching system integration, and connecting industry leaders. While hosting the Global Medical Device Podcast, Etienne has led over 200...